AI SLEEP MONITOR

A data-driven approach to the Ferber sleep training method.

1. The "Why": Data-Driven Sleep Training

Sleep training is one of the most challenging aspects of early parenthood. We chose the Ferber method, which involves letting the child self-soothe for progressively longer intervals before a parent checks in. However, the key to success isn't just watching the clock; it's understanding the nature of the crying.

The core philosophy is to empower kids to get back to sleep on their own. But as a parent, it's agonizing to decide when to intervene. Is the crying escalating into distress? Or is it de-escalating into settling?

I built this system to remove the guesswork. By visualizing the rate of change and the intensity trends of the crying in real time, we could objectively judge whether our child was figuring it out or needed support, turning an emotional ordeal into a manageable, data-backed process.

2. The "How": System Architecture

Tech Stack

- Core: Python 3.9+

- Audio Input: USB microphone via sounddevice

- Signal Processing: scipy.signal (Butterworth filters, peak detection)

- Backend: Flask + Flask-SocketIO

- Database: SQLite (Time-series data)

- Frontend: HTML5, CSS3, Chart.js for real-time visualization
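To make SQLite's "time-series" role concrete, here is a minimal sketch of a one-row-per-second log using Python's built-in sqlite3 module. The table name readings, its columns, and the helper functions are hypothetical; the post does not describe the actual schema.

```python
import sqlite3
import time

# Hypothetical schema: one row per one-second reading. Table and column
# names are illustrative assumptions, not the project's actual schema.
SCHEMA = """
CREATE TABLE IF NOT EXISTS readings (
    ts    REAL PRIMARY KEY,  -- Unix timestamp in seconds
    rms   REAL NOT NULL,     -- RMS amplitude for that second
    level INTEGER NOT NULL,  -- 0 (below noise floor) to 4 (distress)
    state TEXT NOT NULL      -- e.g. 'Sleeping', 'Settling', 'Awake/Crying'
)
"""


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def log_reading(conn, ts, rms, level, state):
    """Append one per-second reading."""
    conn.execute(
        "INSERT INTO readings (ts, rms, level, state) VALUES (?, ?, ?, ?)",
        (ts, rms, level, state),
    )
    conn.commit()


def readings_since(conn, start_ts):
    """Time-range query, e.g. to redraw the chart after a page reload."""
    cur = conn.execute(
        "SELECT ts, rms, level, state FROM readings WHERE ts >= ? ORDER BY ts",
        (start_ts,),
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = open_db()
    now = time.time()
    for i, rms in enumerate([0.01, 0.12, 0.30]):
        log_reading(conn, now + i, rms, level=i, state="Awake/Crying")
    print(readings_since(conn, now + 1))
```

Keying rows on the timestamp keeps range queries for the 1-, 5- and 10-minute views simple and ordered.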

Signal Processing Pipeline

The system captures raw audio at 44.1 kHz. It applies a 5th-order Butterworth lowpass filter to remove high-frequency noise. We then calculate the root mean square (RMS) amplitude to determine the "loudness" of the room.
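The filter-then-RMS step might look roughly like the sketch below. It designs the 5th-order Butterworth filter with scipy.signal.butter in second-order-sections form and carries the filter state between audio blocks so block boundaries don't introduce artifacts. The 4 kHz cutoff, the class name LowpassRMS, and the block-based design are assumptions for illustration; the post does not state the cutoff frequency or how audio is chunked.

```python
import numpy as np
from scipy import signal

FS = 44_100        # sample rate in Hz, as stated in the text
ORDER = 5          # 5th-order Butterworth, as stated in the text
CUTOFF_HZ = 4_000  # ASSUMED cutoff; the original does not give one


class LowpassRMS:
    """Hypothetical helper: lowpass-filter audio blocks and return their RMS."""

    def __init__(self, fs=FS, cutoff_hz=CUTOFF_HZ, order=ORDER):
        # Second-order sections are numerically safer than (b, a) coefficients.
        self.sos = signal.butter(order, cutoff_hz, btype="low", fs=fs, output="sos")
        # Filter state carried from one block to the next (starts at rest).
        self.zi = np.zeros((self.sos.shape[0], 2))

    def process(self, block):
        """Filter one block of mono samples and return its RMS amplitude."""
        x = np.asarray(block, dtype=np.float64)
        y, self.zi = signal.sosfilt(self.sos, x, zi=self.zi)
        return float(np.sqrt(np.mean(y ** 2)))
```

For a one-second block of a 200 Hz sine with amplitude 0.5, the result should come out close to 0.5/√2 ≈ 0.354, while a 15 kHz tone of the same amplitude is almost entirely removed.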

Using scipy.signal.find_peaks, the system distinguishes distinct cry events from ambient noise. These peaks are analyzed for prominence and width to distinguish between a "whimper" and a full "cry."
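A minimal sketch of this classification, assuming find_peaks runs over a sequence of RMS values (an "envelope") rather than raw samples. Every threshold and the function name detect_events are hypothetical placeholders that would need tuning against real recordings.

```python
import numpy as np
from scipy.signal import find_peaks

# All thresholds are ASSUMED values, in RMS units and envelope frames.
NOISE_FLOOR = 0.02     # ignore anything quieter than the room's ambient level
MIN_PROMINENCE = 0.03  # a peak must stand at least this far above its surroundings
CRY_PROMINENCE = 0.15  # prominence at or above this suggests a full cry
CRY_WIDTH = 10         # width (frames, at half prominence) suggesting a full cry


def detect_events(envelope):
    """Find sound events in an RMS envelope and label each as whimper or cry."""
    env = np.asarray(envelope, dtype=np.float64)
    # width=1 makes find_peaks compute peak widths at half prominence.
    peaks, props = find_peaks(
        env, height=NOISE_FLOOR, prominence=MIN_PROMINENCE, width=1
    )
    events = []
    for idx, prom, width in zip(peaks, props["prominences"], props["widths"]):
        is_cry = prom >= CRY_PROMINENCE and width >= CRY_WIDTH
        events.append((int(idx), "cry" if is_cry else "whimper"))
    return events
```

Requiring both high prominence and long width for a "cry" means a single loud but brief sound (a cough, a creak) is labeled a whimper rather than a cry.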

State Machine Logic

The system doesn't just record volume; it determines the state of the room based on thresholds and time windows:

Level 1: Whimper

Low-intensity sounds. Often indicates self-soothing or light settling.

Level 2-3: Crying

Mid-range intensity. This is the active training zone where we monitor for escalation.

Level 4: Distress

High-intensity, sustained volume. Immediate indicator that intervention might be needed.
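One way to map each second's RMS value onto these levels is a simple threshold table, sketched below. The numeric boundaries, the five-second SUSTAIN_SECONDS rule standing in for "sustained", and the LevelClassifier name are all assumptions; level 0 is used here to mean "below the noise floor".

```python
# ASSUMED RMS boundaries for each level, checked from loudest to quietest.
# Real values need calibrating against your own microphone and room.
LEVEL_THRESHOLDS = [
    (0.30, 4),  # distress (only once sustained, see below)
    (0.20, 3),
    (0.10, 2),
    (0.03, 1),  # whimper
]
SUSTAIN_SECONDS = 5  # ASSUMED: level 4 needs this many loud seconds in a row


class LevelClassifier:
    """Hypothetical per-second classifier from RMS amplitude to level 0-4."""

    def __init__(self):
        self.loud_streak = 0  # consecutive seconds at distress volume

    def classify(self, rms):
        raw = 0  # below every threshold: treat as noise floor
        for threshold, level in LEVEL_THRESHOLDS:
            if rms >= threshold:
                raw = level
                break
        self.loud_streak = self.loud_streak + 1 if raw == 4 else 0
        # A brief spike at distress volume is reported as level 3 until sustained.
        if raw == 4 and self.loud_streak < SUSTAIN_SECONDS:
            return 3
        return raw
```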

A cumulative counter tracks these events over a rolling window. If the "Level 1" count exceeds a threshold, the status shifts to Settling. If "Level 2-4" counts spike, it shifts to Awake/Crying. If the room remains below the noise floor for 10 seconds, it transitions to Sleeping.
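The rolling-window logic could be sketched as follows, treating each second's level as one event. Only the 10-second quiet rule comes from the text; the 30-second window, both count thresholds, the RoomState name, and the order in which the rules are checked are assumptions.

```python
from collections import deque

WINDOW_SECONDS = 30     # ASSUMED rolling-window length
SETTLING_THRESHOLD = 3  # ASSUMED: at least this many whimpers -> "Settling"
CRYING_THRESHOLD = 3    # ASSUMED: at least this many level 2-4 events -> "Awake/Crying"
QUIET_SECONDS = 10      # from the text: 10 s below the noise floor -> "Sleeping"


class RoomState:
    """Hypothetical state machine fed one level (0-4) per second."""

    def __init__(self):
        self.levels = deque(maxlen=WINDOW_SECONDS)  # oldest seconds drop off
        self.quiet_run = 0  # consecutive seconds at level 0
        self.state = "Sleeping"

    def update(self, level):
        """Record one per-second level and return the current room state."""
        self.levels.append(level)
        self.quiet_run = self.quiet_run + 1 if level == 0 else 0
        whimpers = sum(1 for lv in self.levels if lv == 1)
        cries = sum(1 for lv in self.levels if lv >= 2)
        if self.quiet_run >= QUIET_SECONDS:
            self.state = "Sleeping"
        elif cries >= CRYING_THRESHOLD:
            self.state = "Awake/Crying"
        elif whimpers >= SETTLING_THRESHOLD:
            self.state = "Settling"
        # Otherwise keep the previous state, so one stray noise can't flip it.
        return self.state
```

Checking quiet time first lets the room return to Sleeping even while older cries are still inside the window, and keeping the previous state when no rule fires adds a little hysteresis.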

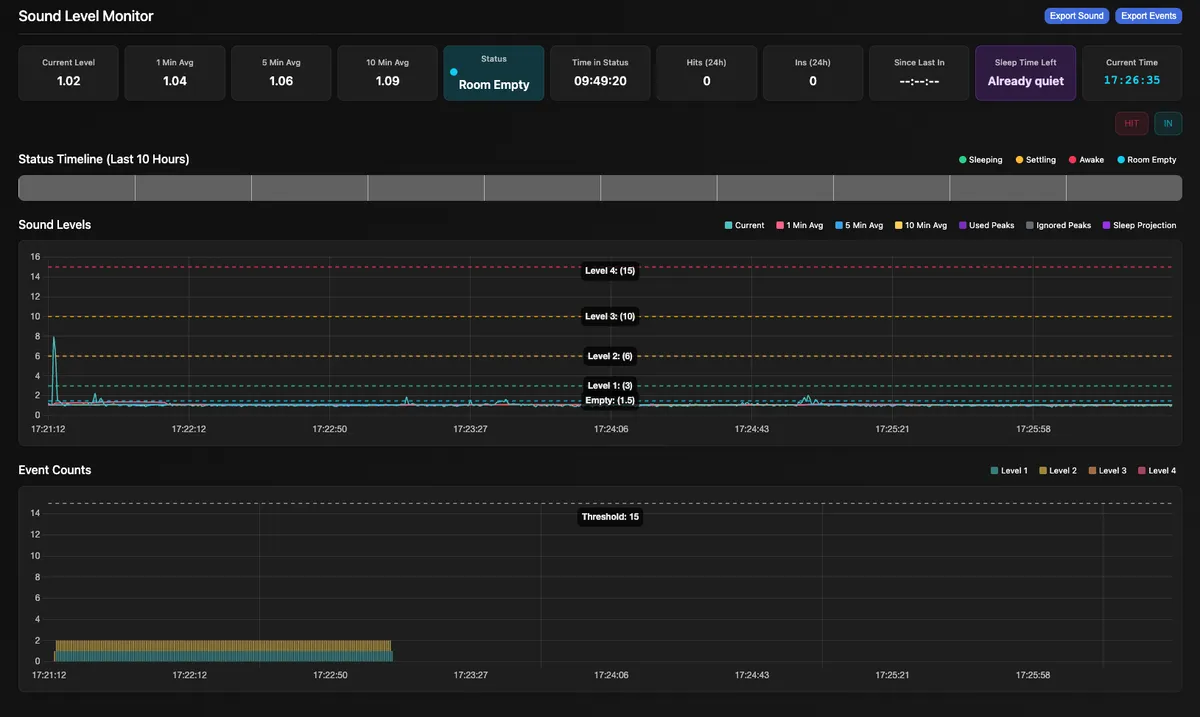

3. Real-Time Visualization

The frontend connects via WebSockets to receive updates every second. It renders a live dashboard showing:

- Instantaneous Volume: The raw feed of the room.

- Moving Averages: 1-minute, 5-minute, and 10-minute trend lines. This is crucial for seeing if the overall volume is trending down even if there are momentary spikes.

- Sleep Projection: A linear regression model fits recent peak data to estimate "Time to Sleep", predicting when the room will return to silence based on the current decay rate of the crying.
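The trend lines and the projection can both be expressed in a few lines of NumPy. This sketch assumes one sample per second (so the 1-, 5- and 10-minute averages use windows of 60, 300 and 600 samples) and assumes the regression is fitted to peak amplitude versus time, then extrapolated to a hypothetical quiet_level such as the noise floor. The function names are illustrative, not taken from the project.

```python
import numpy as np


def moving_average(values, window):
    """Trailing mean of the last `window` samples (ASSUMED 1 sample per second)."""
    v = np.asarray(values, dtype=np.float64)
    return float(v[-window:].mean())


def time_to_sleep(peak_times, peak_levels, quiet_level):
    """Fit level = slope * t + intercept to recent peaks, solve for quiet_level.

    Returns seconds from the last peak until the fitted line reaches
    quiet_level, or None if there is too little data or no decay.
    """
    t = np.asarray(peak_times, dtype=np.float64)
    y = np.asarray(peak_levels, dtype=np.float64)
    if len(t) < 2:
        return None
    slope, intercept = np.polyfit(t, y, 1)  # ordinary least-squares line
    if slope >= 0:
        return None  # crying is flat or escalating, so no projection
    t_quiet = (quiet_level - intercept) / slope
    return max(0.0, float(t_quiet - t[-1]))
```

For example, peaks of 0.4, 0.3, 0.2 and 0.1 at 0, 10, 20 and 30 s decay by 0.01 per second, so with quiet_level=0.02 the projection is about 8 s after the last peak.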

This projection feature was a game-changer, giving us a concrete "light at the end of the tunnel" during tough nights.